From Heroes to Zeroes: My Amazon ML Challenge 2024 Experience.

Ambition is possibly the best fuel that propels the mind. But sometimes, soaring on ambition alone is dangerous. Especially if you soar too close to the sun.

It was quite an exciting day when my teammates Karan Bhatia and Vedika Walhe and I started planning our solution for this year’s Amazon ML Challenge. The challenge was simple to state: develop a machine learning model that can accurately extract the weight, height, volume and other properties of an item, given its photo. Right away, we began planning and downloading that absolutely humongous dataset. Who knew how distraught we’d be only days later, as the deadline drew close?

In the end, we lost terribly… We had processed only 1,000 outputs out of more than 130,000, with an F1 score of just 0.00016, a number which has three more zeroes than I want to see.

Our planning was impeccable, and both our methodology and code were flawless.

Not to mention, we had our coffee rationed.

Then where did we go wrong?

(Oh boy, here we go.)

1. Going Multiple Ways

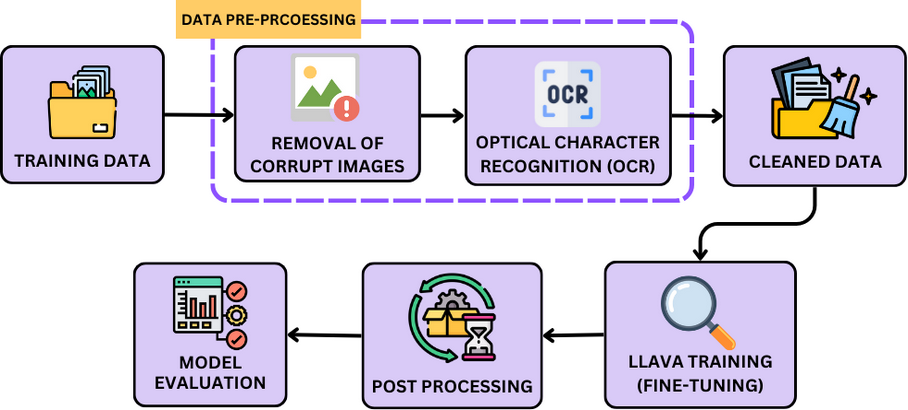

In the beginning, we were pretty confident that the best way to tackle this problem was to use Optical Character Recognition (OCR) along with regular expressions, NLP, or an LLM to process the text generated by the OCR. Then suddenly, in a stroke of genius, one of us had the idea of using a multimodal LLM. Without going into too much depth: we fine-tuned LLaVA on 40 GB worth of images and text labels, so that it would read an image and respond with the values requested in the prompt.
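To make the OCR-plus-regex idea concrete, here is a minimal sketch of the post-processing step: given text that an OCR engine has already pulled off the image, find every number followed by a known unit. This is an illustration and not our actual competition code. The unit table `UNIT_ALIASES` and the function `extract_measurements` are hypothetical names made up for this example, and the real challenge had its own list of allowed units.

```python
import re

# Hypothetical unit table: maps spellings that might show up in OCR text
# to one canonical unit name. Not the challenge's official list.
UNIT_ALIASES = {
    "g": "gram", "gram": "gram", "grams": "gram",
    "kg": "kilogram", "kilogram": "kilogram", "kilograms": "kilogram",
    "ml": "millilitre", "l": "litre",
    "cm": "centimetre", "mm": "millimetre",
}

# Longest aliases first, so "grams" is tried before "g".
_UNITS = "|".join(sorted(UNIT_ALIASES, key=len, reverse=True))

# A number (optionally with a decimal part), optional whitespace, then a unit.
PATTERN = re.compile(rf"(\d+(?:\.\d+)?)\s*({_UNITS})\b", re.IGNORECASE)


def extract_measurements(ocr_text: str) -> list[tuple[float, str]]:
    """Return every (value, canonical_unit) pair found in the OCR output."""
    results = []
    for number, unit in PATTERN.findall(ocr_text):
        results.append((float(number), UNIT_ALIASES[unit.lower()]))
    return results


if __name__ == "__main__":
    print(extract_measurements("Net Wt. 500 g (1.5kg pack) 250ML"))
```

A pattern like this is cheap and fast, but it only works when the OCR text is clean and the label actually prints the value you want, which is part of why the multimodal route looked so tempting.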

Eventually, we made the following flow (courtesy of Vedika):

But this is where we made a fatal mistake… We tried to build two models at once: one that uses OCR, and another that uses LLaVA. Obviously, who would want to bet everything on the multimodal LLM idea? It sounded too good to be true. But guess what? Some of the top teams did use multimodal LLMs.

We had the magnitude of ambition and enthusiasm, but a vector cannot have two directions. And thus the magnitude of our skills was split in two. That was just the first nail we hammered into the coffin of our solution.

There are two ways the human mind thinks: quickly and intuitively, or slowly but analytically. We let our intuition grow too high, too close to the sun. And then time ticked away, and our hopes simply burned out. Although the reason for that could’ve been the heat from our GPUs.

2. Lack of Resources

Even after the initial blunder, our code was ready by the end of day 2. That meant that we had two entire days to do our thing. Then how did we lose so badly? The reason is quite simple: I did not choose our resources wisely.

After we cleaned the data, I was initially overseeing the fine-tuning of the model. I began pretty optimistically, but we hit a snag instantly: running out of GPU memory. We were training on Kaggle, where the maximum memory you could have was 30 GB (2 T4s, with 15 gigs each), and that was still not enough. Even talking to my rubber duck didn’t help much.

After quantizing the model to fewer bits and making some more sacrifices on precision, we were finally able to fine-tune it. As Karan quite bravely watched over the fine-tuning overnight and I shamelessly fell asleep (hey, I was tired), Vedika had already begun testing the code that would actually be used with this model. Night shifted into day as they carried out varied atrocities on their respective keyboards.
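For anyone wondering why quantization was the lever we reached for, a back-of-the-envelope estimate helps. The sketch below only counts the memory needed to hold the model weights at different precisions. The 7-billion-parameter figure is an assumption for illustration (this post never pinned down the exact model size), and `weights_gib` is a helper made up for this example.

```python
def weights_gib(num_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bits_per_param / 8 / 2**30


# Assumption: a 7B-parameter model. Swap in the real size if you know it.
ASSUMED_PARAMS = 7e9

if __name__ == "__main__":
    for bits in (32, 16, 8, 4):
        size = weights_gib(ASSUMED_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {size:5.1f} GiB")
```

Keep in mind that this is a floor and not a budget: fine-tuning also needs room for gradients, optimizer state and activations, so the real footprint is well above the weights alone. Under the 7B assumption, 16-bit weights by themselves already take most of a single 15 GB card.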

Somehow, we finished fine-tuning the model with only 7 hours remaining till the deadline. I had woken up and taken over the job of generating the outputs, to finally give my hard-working teammates a break, now that I was fresh and they looked like a pair of depressed pandas. But obviously, things did not go as intended. More GPU crashes, a few memory leaks, and a lot of questioning my life choices later, I finally got the model to start generating outputs.

Only 1 hour was left when we finished 1,000 rows. Out of 136,000 rows. No amount of coffee could console me at that point.
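The arithmetic we should have done on day one looks something like this. It uses only the numbers from this post, plus one simplifying assumption: that all 6 hours between "7 hours remaining" and "1 hour left" went into generating outputs. Some of that time actually went into crashes, so our real throughput was a bit better than this, but not by enough to matter. The function names are made up for this example.

```python
import math


def hours_to_finish(rows_total: int, rows_done: int, hours_spent: float) -> float:
    """Hours needed for the whole test set at the observed rows-per-hour rate."""
    rows_per_hour = rows_done / hours_spent
    return rows_total / rows_per_hour


def workers_needed(total_hours: float, hours_available: float) -> int:
    """Equally fast parallel workers needed to fit the job into the time available."""
    return math.ceil(total_hours / hours_available)


if __name__ == "__main__":
    # Assumption: the full 6 hours were spent on inference.
    total = hours_to_finish(rows_total=136_000, rows_done=1_000, hours_spent=6)
    print(f"Hours needed on our setup: {total:.0f}")
    print(f"That is about {total / 24:.0f} days")
    print(f"Workers needed to finish in 6 hours: {workers_needed(total, 6)}")
```

Running a throughput test on a few hundred rows before committing to the model would have told us this while there was still time to change course.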

How did this happen? And how did the other teams fare so well then?

Well, the answer may sound rather cocky, but we simply did not have the same resources as the people at tier 1 and tier 2 colleges do.

But that is only half of the reason. The blame is on us too. We could’ve used products like Lightning AI, or somehow farmed compute from multiple cloud GPUs, by hook or by crook. Nothing in this world is fair, and I do not blame the brilliant minds working at IITs and other great institutes at all.

In the end, this was our fault. A planning fallacy.

3. Priorities

At the end of the day, we’re undergraduates, all three of us. Our weekend might’ve been busy with the hackathon, but that would not stop the academic machinery of our colleges from absolutely devouring us, as submissions, assignments and project work filled our plates. As a result, the flames of our ambitions were quickly quenched by a rain of deadlines. To be brash about it, we half-assed this.

Maybe we had our priorities wrong. Maybe we would have lost either way. Who knows? But I do acknowledge that if we had handled academia ahead of time and focused on the hackathon, we could have had an F1 score that exceeds the chance of this blog going viral, at least.

4. …Following the rules

You read that right. This is not exactly a valid reason but… we could’ve cut our losses and just used a multimodal LLM without fine-tuning it, like the team that came 15th did, according to this LinkedIn post.

Okay, seriously. I don’t even know if that’s cheating. It was never clearly mentioned that using the dataset was necessary. Pretty nifty, but let’s hope these guys pass further scrutiny, if any happens at all.

To Conclude

We lost terribly, and I won’t sugarcoat it by saying that this was a learning moment for us. We were very much lost in disappointment, and tired from four days of effort and sleepless nights (although I did cheat in that department), leading to nearly nothing in the end.

It is, however, something for you, the reader, to learn from. Budding engineers are inherently bad at management, and ambition is nothing if you cannot channel it. Ambition is something we had plenty of. But even the brightest mind can be derailed by a lack of focus and a misguided sense of ambition. Discipline and planning beat ambition and talent by a long shot.

We may have lost the Amazon ML Challenge, but we did increase our caffeine tolerance by twenty times… And more importantly, we will be more prepared next time. With enough coffee, lots of determination, and better management and planning, who knows, maybe we will win big time soon?